Load Testing with K6

Implementing K6 for load testing user journeys with flexible configuration

At my current job we were looking at new ways to load test user journeys.

As some of our code base is Node.js, we looked at tools that would let us reuse our existing libraries and make adding tests as easy as possible.

We found that K6 lets us write tests in ES6 JavaScript.

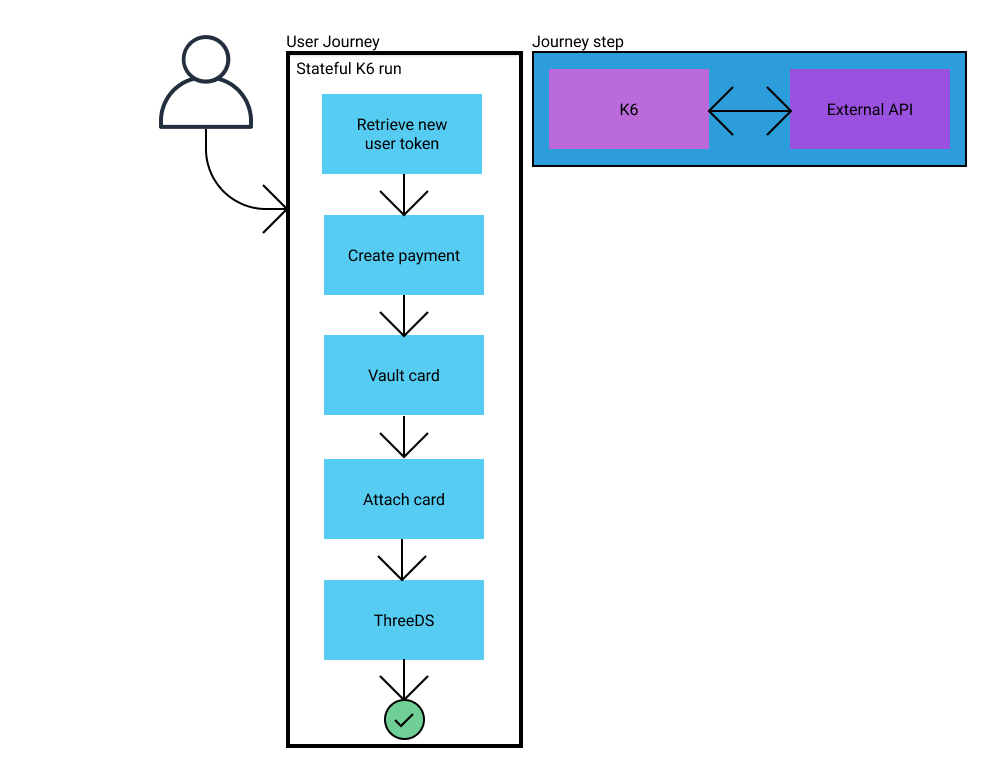

A journey represents the list of requests that make up a user story. The requests might or might not belong to our team, because the journey covers a whole interaction.

An example would be:

Most APIs here belong to our teams, but we do have external dependencies such as merchant acquirers (e.g. Worldpay).

Because of this, we run each journey in two modes: internal and external.

Internal uses specific data to route requests to fake third-party APIs, which have the same average and 90th percentile response times as the real ones.

External will use the actual staging or production third party API.
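As a rough illustration of the two modes, the sketch below picks the test data for a run from a mode name. Everything in it is an assumption for the sake of the example: the `JOURNEY_DATA` table, the `merchantId` field and its values, and the `JOURNEY_MODE` variable name are hypothetical and not taken from our real setup.

```javascript
// Hypothetical test data: field names and values are placeholders.
// The idea is that the "internal" record routes to the fake third-party API
// and the "external" record routes to the real staging/production one.
const JOURNEY_DATA = {
  internal: { merchantId: 'fake-acquirer-merchant' },
  external: { merchantId: 'real-acquirer-merchant' },
};

// Return the test data for a run mode, failing loudly on a typo
// so a misconfigured run does not silently hit the wrong dependency.
function journeyDataFor(mode) {
  if (!Object.prototype.hasOwnProperty.call(JOURNEY_DATA, mode)) {
    throw new Error(`Unknown journey mode: ${mode}`);
  }
  return JOURNEY_DATA[mode];
}

// In a k6 script the mode could come from an environment variable, e.g.
//   k6 run -e JOURNEY_MODE=internal script.js
// and be read as journeyDataFor(__ENV.JOURNEY_MODE).
```

Keeping the switch in data rather than in the journey code means the same journey runs unchanged in both modes.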

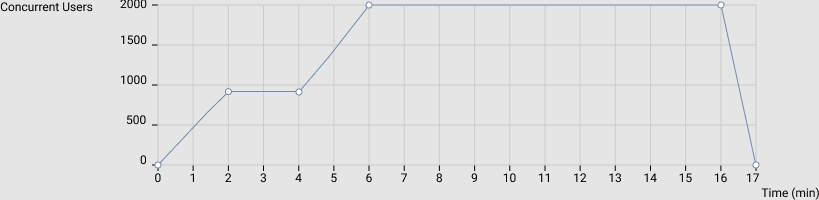

With the K6 configuration we can define a flexible ramp-up and ramp-down.

    {
      "stages": [
        { "duration": "2m", "target": 800 },
        { "duration": "2m" },
        { "duration": "2m", "target": 2000 },
        { "duration": "10m" },
        { "duration": "1m", "target": 0 }
      ],
      "noConnectionReuse": true
    }
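To make the shape of this ramp easier to see, here is a small sketch that computes the planned number of virtual users at a given point in the test. It is not part of K6; `durationToSeconds` and `plannedVUs` are hypothetical helpers. It assumes that a stage without a `target` holds the previous level, which is how the configuration above reads, so check how your K6 version treats such stages.

```javascript
// The stages from the configuration above.
const stages = [
  { duration: '2m', target: 800 },
  { duration: '2m' },
  { duration: '2m', target: 2000 },
  { duration: '10m' },
  { duration: '1m', target: 0 },
];

// Convert a duration string such as "2m" or "1m30s" to seconds.
function durationToSeconds(duration) {
  const units = { h: 3600, m: 60, s: 1, ms: 0.001 };
  let total = 0;
  for (const [, value, unit] of duration.matchAll(/(\d+)(ms|h|m|s)/g)) {
    total += Number(value) * units[unit];
  }
  return total;
}

// Planned number of virtual users `elapsed` seconds into the test,
// using linear interpolation within each stage.
// Assumption: a stage without "target" holds the previous level.
function plannedVUs(stageList, elapsed, startVUs = 0) {
  let from = startVUs;
  let stageStart = 0;
  for (const stage of stageList) {
    const length = durationToSeconds(stage.duration);
    const to = stage.target === undefined ? from : stage.target;
    if (elapsed < stageStart + length) {
      const progress = (elapsed - stageStart) / length;
      return Math.round(from + (to - from) * progress);
    }
    from = to;
    stageStart += length;
  }
  return from; // after the last stage
}
```

For example, `plannedVUs(stages, 60)` gives 400 (halfway up the first ramp), `plannedVUs(stages, 300)` gives 1400 (halfway through the second ramp), and `plannedVUs(stages, 600)` gives 2000 (inside the ten-minute hold). The whole run lasts 17 minutes.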

K6 has the concept of Virtual Users: each user (actor) is somewhere along the journey, and a specific number of users are running at any given time.

When developing a new feature, we also add a new “k6 journey” along with a configuration like the one above.
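A journey can be pictured as an ordered list of steps that each virtual user walks through. The sketch below is one way to express that, not our actual implementation: `checkoutJourney`, its paths and bodies, and the `runJourney` helper are all hypothetical. The HTTP client is passed in as a parameter, and its `request` method follows the shape of K6's `http.request(method, url, body)`.

```javascript
// Hypothetical journey: names, paths and bodies are placeholders.
const checkoutJourney = [
  { name: 'create basket', method: 'POST', path: '/baskets', body: { items: 1 } },
  { name: 'pay', method: 'POST', path: '/payments', body: { amount: 100 } },
  { name: 'get receipt', method: 'GET', path: '/receipts/latest' },
];

// Run every step in order with the given HTTP client and
// return the status code of each response.
function runJourney(client, baseUrl, steps) {
  return steps.map((step) => {
    const body = step.body ? JSON.stringify(step.body) : null;
    const res = client.request(step.method, baseUrl + step.path, body);
    return { name: step.name, status: res.status };
  });
}

// In a k6 script this could be wired up roughly as:
//   import http from 'k6/http';
//   export default function () {
//     runJourney(http, BASE_URL, checkoutJourney); // BASE_URL is an assumption
//   }
// k6 calls the default function repeatedly for every virtual user.
```

Passing the client in also makes the journey easy to exercise outside K6 with a fake client.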

We run the new journey along with all existing journeys in the CI for regression purposes.

By running the load tests often alongside new features, we verify some of the performance requirements early, even though the tests don’t fully mimic production.
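One way to turn a performance requirement into a CI pass/fail signal is K6's `thresholds` option, which makes `k6 run` exit with a non-zero code when a threshold is crossed. The sketch below is an assumption about how that could look, not a description of our pipeline: the `withThresholds` helper and the limits passed to it are hypothetical, while `http_req_duration` and `http_req_failed` are built-in K6 metrics.

```javascript
// Return a copy of a k6 options object with pass/fail thresholds added.
// The limits are supplied by the caller; the values used below are placeholders.
function withThresholds(options, p90Millis, maxErrorRate) {
  return {
    ...options,
    thresholds: {
      // 90th percentile response time must stay below p90Millis milliseconds
      http_req_duration: [`p(90)<${p90Millis}`],
      // share of failed requests must stay below maxErrorRate
      http_req_failed: [`rate<${maxErrorRate}`],
    },
  };
}

// Example: a short smoke configuration for CI (values are illustrative only).
const ciOptions = withThresholds(
  { stages: [{ duration: '1m', target: 50 }], noConnectionReuse: true },
  500,
  0.01
);
```

In a K6 script, `ciOptions` would be exported as `options`.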

Conclusion

This implementation allowed us to integrate load testing directly into our CI pipeline, providing early performance feedback during development. The dual-mode approach (internal vs external) proved valuable for isolating performance issues to our own systems versus third-party dependencies.

Key benefits included:

- Early Detection: Performance regressions caught before production

- Flexible Configuration: Easy adjustment of load patterns per journey

- Developer-Friendly: ES6 syntax aligned with our existing codebase

Future improvements could include more sophisticated monitoring of individual API endpoints and better correlation with production traffic patterns.