Shopify Syncing with Webhooks and logging with AWS Athena and S3

original posted on medium

Introduction Link to heading

At my current project I’m developing an application that uses data from Shopify for reporting, logistics, and others.

To keep the application and Shopify in sync we trust Shopify’s Product and Order webhooks.

Syncing Link to heading

We trust Shopify is our single source of truth and our application data will be eventually consistent by transferring those webhooks onto a queue ( Redis) where the webhooks’ data will transfer to workers that will process it into our production database.

- The queue will retry or error on any import issues.

- And there is a regular scheduled import to make sure no updates miss and to handle reconciliation.

Performance Requirements Link to heading

During high throughput moments (e.g. flash sales) we want to have best performance so to try and keep the database as lean as possible for user queries we:

- Store the necessary fields from webhooks.

- Store the final (current) status of an order or product and overwrite any intermediate create and update requests (e.g. basket modification webhooks).

- Process and replace fields as required as well as generating entries in other tables (risk, reporting, tracking user actions, etc).

Any changes to the application rely on creating schema migrations and rerunning the data importing for larger intervals — Processing is idempotent (including reporting) so this can be ran as often as required.

Logging Use Cases and Requirements Link to heading

So far we’ve been fortunate not to have issues but we do have to go back and look for errors in orders and products and be aware of what was the state at a specific time.

A good example is an order created and paid with one address and then modified to have a new delivery address. We might want to compare the two of them.

Another scenario is a product which had its inventory updated more than once in a row and we know the end total amount and not all the actions that got us there.

These are specific use cases that are not of great use for the day to day application user and keeping them in the database would hinder its performance greatly.

As the current development team is small we looked into third party logging platforms that would help us to do this but we had issues that keep us from going ahead of any of them:

- None have the option to keep ALL logs forever, these are not application logs but actual sale data.

- The pricing options to keep all the data required were high.

- Poor options to retrieve and restore data.

- We didn’t want to be locked down to yet another platform.

AWS S3 Logging Link to heading

Because of the previous requirements we decided to go with logging the webhooks on S3.

- Pricing was reasonable.

- Good performance and reliability.

- Already using the AWS platform.

Easy to integrate with S3 at that point.

We required the application webhook receiving endpoints to queue onto a low priority S3 worker as well as the current processing workers.

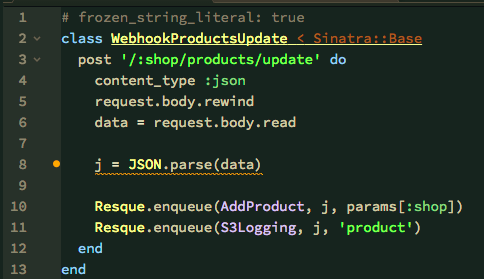

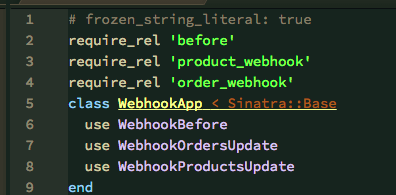

Webhook app used to authenticate POST requests from Shopify and hand them over to Redis

Logs upload based on application/environment/type and named according to their unique Shopify ID and update timestamp in UNIX format.

ENVIRONMENT-logging.application.com/TYPE/SHOPIFYID_TIMESTAMP.json

production-logging.application.com/product/123_1499253839.jsonThe content of the file would be the original JSON content of the webhook as seen in the documentation.

Querying the logs Link to heading

The main reason preventing us to go with S3 sooner was the difficulty to query the logs.

On previous occasions I’ve decided on the ELK stack but this time the amount of logs required and storage requirements took it out of the equation.

Trying to query raw S3 logs was painful and keeping a logging platform just to access it a few times a month seemed expensive costwise and hourwise due to maintenance.

Finally last year AWS came out with Athena which allowed us to do the following.

- Use its query interface when required. Only paying for the queries used. As we don’t automate Athena’s use this is inexpensive.

- Use SQL queries (based on Apache Hive schema semantics and ran on Apache Presto) so not having to add another language to the list of requirements for both developers and database users.

One of the comments I had to this blog post was that Athena allows to use Apache Parquet to compress and handle the data (see this article) but in our scenario we didn’t have an issue with storage pricing and prefered to keep the solution as simple as possible.

Athena Schema and examples Link to heading

Our current Athena/Hive schema for Shopify Product is as follows:

CREATE EXTERNAL TABLE IF NOT EXISTS Database.Webhooks

(`id`bigint,`title`string,`body_html`string,

`vendor`string,`product_type`string,`created_at`string,`handle`string,`updated_at`string,

`published_at`string,`template_suffix`string,`published_scope`string,`tags`string,

`variants`array<struct<`id`: bigint,`product_id`: bigint,`title`: string,`price`: string,`sku`: string,`position`: int,`grams`: int,`inventory_policy`: string,

`compare_at_price`: string,`fulfillment_service`: string,`inventory_management`: string,`option1`: string,`option2`: string,`option3`: string,`created_at`: string,`updated_at`: string,`taxable`: boolean,`barcode`: string,`image_id`: string,`inventory_quantity`:int,`weight`: float,`weight_unit`: string,`old_inventory_quantity`: int,`requires_shipping`: boolean >> )

ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'

WITH SERDEPROPERTIES ( 'serialization.format' = '1' )

LOCATION 's3://production-logging.application.com/product/' T

BLPROPERTIES ('has_encrypted_data'='false');Any other Shopify client can take advantage of this information.

As for querying for a specific Product we can then use:

select \* from webhooks where id = 123;Important to be aware that each log even in JSON format can be in one line! The only processing done to the webhooks’ JSON was removing all newline characters.

Current issues and future work Link to heading

Having a reliable log of all webhooks and being able to query to respond to any question has helped us to track down those really pesky bugs… But there is still work to do that we’d like to review:

- Being able to cross join on the Product Variants — Apache Hive has the Lateral View which allows this, doesn’t seem to be in Athena yet.

- Being able to cast the date strings onto UTC Timestamps — This might be possible, it hasn’t been necessary yet.

- Casting price as a Money type — Also might not be done as Shopify is sending Price as a string.